Posted by Jack Crossfire |

Oct 30, 2012 @ 11:28 PM | 7,655 Views

A new project begins, to get Marcy 1 flying on autopilot from the tablet, using wifi. Wifi has had insurmountable glitches, but the monocopter is stable enough to handle a 1 second loss of signal. There's still a belief that these glitches must be somewhere in the software.

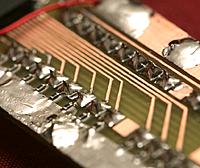

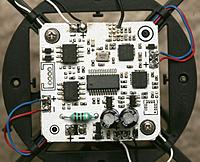

The new toner cartridge is producing a huge number of shorts. Anything 0.5mm apart is getting shorted. The toner is much finer than the original cartridge, but is also printing more whiskers.

The tablet has now been proven with object recognition, sadly for a classified project. It seems to run Java object recognition as fast as a 1GHz AMD dual core running natively. A lot of that is minimizing indirection, copying everything to local variables, minimizing use of virtual functions.

Posted by Jack Crossfire |

Oct 25, 2012 @ 09:12 PM | 7,580 Views

So the leading method of recognizing objects in video is to chroma key the image & compare every blob, byte for byte with the object bitmap. That gives a very effective way to rank all the blobs in the image for similarity to the object, but doesn't say if no blob is the object. A blob can rank highest without being the object.
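A minimal sketch of the ranking step, assuming each blob has already been chroma keyed & scaled to the same size as the object bitmap. The function names & the `max_mismatch_fraction` cutoff are hypothetical. The cutoff is just 1 possible way to reject the case where no blob is the object, not something the original method does.

```python
def mismatch(blob: bytes, template: bytes) -> int:
    """Count the byte positions where a keyed blob differs from the object bitmap."""
    if len(blob) != len(template):
        raise ValueError("blob must be scaled to the template size first")
    return sum(a != b for a, b in zip(blob, template))


def rank_blobs(blobs, template):
    """Return (blob index, mismatch count) pairs, most similar blob first."""
    scores = [(i, mismatch(b, template)) for i, b in enumerate(blobs)]
    return sorted(scores, key=lambda s: s[1])


def best_match(blobs, template, max_mismatch_fraction=0.25):
    """Return the index of the best blob, or None if even the best is too different.

    max_mismatch_fraction is an assumed tuning value, not from the original post.
    """
    ranked = rank_blobs(blobs, template)
    if not ranked:
        return None
    index, score = ranked[0]
    if score > max_mismatch_fraction * len(template):
        return None
    return index
```

Ranking alone always produces a winner. The cutoff is what turns the ranking into a yes/no answer, & it would have to be tuned per scene.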

In the interest of debugging the chroma keying, ported the RT Jpeg decoder to the tablet, in Java. Running a JPEG decoder in Java was a bit crazy. There was a chance the JIT would be fast enough & any native code is going to be a nightmare.

Incredibly, there's no native code support in Android, no native driver interface, no native libraries. Outside the purely Java world, you're on your own.

The Java JPEG decoder managed to pull off a pretty decent frame rate. It definitely isn't as fast as C, but it's definitely being compiled instead of interpreted. For 320x240 video, it was surprisingly split evenly between 2 cores.

There aren't 2 equally demanding threads. Either it's splitting an individual thread or copying the bitmap to the screen is as demanding as decompressing it. It seems to try to keep all the threads on 1 core until it reaches 100% & it seems to power down the unused cores instead of constantly swapping cores.

The problem with chroma keying was immediately revealed in the form of a mostly red image & terrible color rendition. Manual white balance made it more red.

The need to calibrate it to make the ambient light neutral would make any chroma keying impractical. It would have to look at a neutral color & calibrate the white balance right after starting up, then lock it.
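The calibrate-then-lock idea could be sketched like this, assuming the camera delivers 8 bit RGB pixels & a patch of a known neutral target is sampled once at startup. The function names are hypothetical & normalizing to the green channel is an assumption.

```python
def white_balance_gains(neutral_patch):
    """Compute per-channel gains from pixels sampled off a neutral (gray or white) target.

    neutral_patch: list of (r, g, b) tuples.
    Returns (r_gain, g_gain, b_gain), normalized so green is left unchanged.
    """
    n = len(neutral_patch)
    if n == 0:
        raise ValueError("need at least 1 sample")
    avg = [sum(p[c] for p in neutral_patch) / n for c in range(3)]
    if min(avg) <= 0:
        raise ValueError("a channel is completely dark, can't calibrate")
    target = avg[1]
    return tuple(target / a for a in avg)


def apply_gains(pixel, gains):
    """Apply the locked gains to 1 pixel, clipping at the 8 bit maximum."""
    return tuple(min(255, round(v * g)) for v, g in zip(pixel, gains))
```

The gains would be computed once & reused for every later frame, so the keying thresholds don't shift when the scene changes.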

Color rendition isn't accurate enough to do a very good job. There's lots of moire.

Posted by Jack Crossfire |

Oct 24, 2012 @ 03:36 AM | 7,338 Views

After 15 years & $100 spent on meters that broke, it was time for something new. If this doesn't last forever, nothing will.

Posted by Jack Crossfire |

Oct 23, 2012 @ 08:58 AM | 7,301 Views

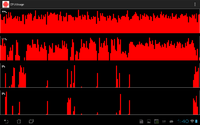

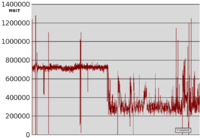

So a quad core CPU grapher was a great need. There are many single CPU meters, but no-one could manage to recreate the age-old Windows Task Manager, in existence for all time.

It wasn't hard to write a CPU grapher. This revealed the recent app list does indeed kill apps & apps can indeed run at full speed in the background. Every app that wants to run in the background needs to create a static object that all the activities reference. The activities come & go, but the static object always exists until it's slid off the recent apps.
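The post doesn't say how the grapher reads the load. A sketch of 1 common approach, assuming the per-core counters in Linux's `/proc/stat` are readable, is to take 2 snapshots & compare the busy time to the total time on each core. The function names are hypothetical.

```python
def parse_proc_stat(text):
    """Return {core name: (busy jiffies, total jiffies)} from /proc/stat text."""
    result = {}
    for line in text.splitlines():
        fields = line.split()
        # Per-core lines look like "cpu0 user nice system idle iowait irq softirq steal ..."
        # The aggregate "cpu" line & non-cpu lines are skipped.
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        values = [int(v) for v in fields[1:9]]
        total = sum(values)
        idle = values[3] + (values[4] if len(values) > 4 else 0)
        result[fields[0]] = (total - idle, total)
    return result


def utilization(before, after):
    """Return {core name: percent busy} between 2 parsed snapshots."""
    usage = {}
    for name, (busy1, total1) in after.items():
        if name not in before:
            # Core was offline in the 1st snapshot. No valid interval to measure.
            usage[name] = 0.0
            continue
        busy0, total0 = before[name]
        dt = total1 - total0
        usage[name] = 100.0 * (busy1 - busy0) / dt if dt > 0 else 0.0
    return usage
```

In use, the text of `/proc/stat` would be read once per graph interval & each new snapshot compared with the previous one.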

This is something X Plane misses, hence its 5 minute install operation has to be done in the foreground. Background execution allows the CPU grapher to profile another app. X Plane uses 100% on 1 CPU.

It's hard to believe a quad 500MHz Xeon that fit in a cabinet was once coveted. Now the tablet has a quad 1.4GHz ARM. It just runs slower than a 166MHz Cyrix because it's Java.

Being a JIT, there was hope all the image processing could be done in Java. This doesn't seem likely. Once anything is done natively, there's no point in using Java anywhere.

Posted by Jack Crossfire |

Oct 22, 2012 @ 02:11 AM | 7,465 Views

It was the 1st night on the mountain in 7 years. The sky was a lot brighter. There were a lot more cars & buildings on the road. Forgotten experience caused 1 hour of footage to be lost to condensation. Wind does not prevent condensation, a fact the lion of days gone by would have known from recent, painful experience but the lion of today was completely unconscious of.

The past lion would also have known scotch tape was not enough to hold the lens heater on. Some emergency insulation with a sock & an extremely cold foot managed to minimize the condensation without the lens heater firmly attached.

Sadly, the intervalometer immediately fell over, thanks to the tiny switches flaking out in the cold. Back to the switch market with this one.

Snagged 6 in 3 hours of observing. Most of them happened between 2 & 3am.

The mountain was still extremely cold & windy, a fact not forgotten. The wind never ends, even at 2am, when the golf course is a tomb.

The introduction of a feature phone & GSM internet access was the 1st major introduction of civilization to the mountain, 7 years ago. Now, the internets were gone, thanks to inflation, but more civilization arrived in the form of a tablet with a flight simulator.

Sadly, X Plane on Android was extremely limited, with 1980s style featureless terrain. Can't imagine who would pay for those scenery files. Goog flight is now worlds ahead, but not available on Android.

There weren't many meteors to see, thanks to the tablet, foggy lens, & wind. The newly brightly lit skies took away the romance & feeling of being in space that once was. Years of running have made the mountain more accessible & less remote.

The tree on the mountain was a bit skimpier. Today's observation was much farther from it than the past. Despite the tablet, equipment was minimal compared to those days. The peak years involved bringing food, a backpack, multiple lenses, & lots of camera mount parts. It's back to just a tripod & camera.

Posted by Jack Crossfire |

Oct 20, 2012 @ 06:42 AM | 7,418 Views

Would say Android was a respectable choice for development. The development tools are pretty refined. Eclipse is clunky & its text editor is awful, but the end result is much easier to obtain. Can't speak for the iOS development experience, of course.

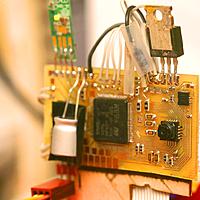

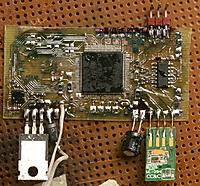

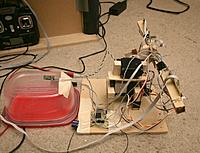

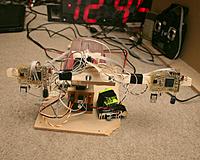

The 1st Android project was a novel way to control a ground rover. It's a really basic, boring interface, but controlling a robot from a tablet definitely feels high tech & futuristic. It only took a week & a half to bring it up, from fabricating the board, reverse engineering the vehicle, patching in all the hardware & writing the tablet application. A lot of that was reusing modules from other projects, but it's hard to think the development tools didn't greatly simplify it.

The Android browser is completely worthless & it feels like very high performance hardware being stuck behind extremely slow software, but it takes a lot less time to create something that feels like it's from the future.

You can either spend a lot of time making something really fast & tight or spend a little time making something really slow & clunky. For most needs, you're more interested in using the software rather than developing it. Any software has a limited lifespan. Platforms become obsolete. If it can't be ported & rewritten quickly, it becomes irrelevant.

It's certainly acceptable to make something irrelevant, very fast & optimized for 1 platform, but which no-one else can use. The reward from the platform support is replaced by the speed, but stuff written for Android can be much more easily ported to Windows & whatever new platform comes around.

Posted by Jack Crossfire |

Oct 19, 2012 @ 05:25 AM | 8,201 Views

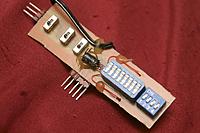

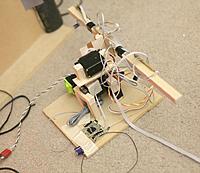

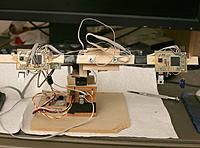

In preparation for the next meteor shower, the 3rd intervalometer was built. Using lessons learned from the last 2, it's supposed to be bulletproof & function in any environment. Dip switch programming is back. That was always guaranteed to work.

Wireless is gone. That was a train wreck. It would have to be done by a really reliable radio on another board, but the last one was so complicated & clumsy to set up, it never got used.

A coin cell would be nice, but the 350mAh LiPo was available. It should last for the next 30 years.

Posted by Jack Crossfire |

Oct 17, 2012 @ 05:11 PM | 8,534 Views

They have all been superior to the Weller, since they're shorter & replacement tips are easier to find. The Hakko needs 550F to do what the Kendal did at 480F. They may be calibrated differently. The Kendal still has a useful heat gun & it's about as compact as any heat gun with a detached compressor can get.

Posted by Jack Crossfire |

Oct 16, 2012 @ 02:10 AM | 8,002 Views

STILL don't understand the rationale behind Java. It still requires kludges to work around the speed limitations, like functions for exposing raw memory & now we live in a world of not just having to port between incompatible operating systems but rewriting in entirely different languages which can't be run on every operating system.

The idea all along was to always run Vicacopter natively & have a layer abstract just the Java user interface. But Vicacopter didn't become very complex. Its main complexity is switching in different pieces of simpler code for different platforms.

Abstracting the Java user interface would be more complex than moving the entire ground segment to Java. Having the main loop run on the native side, using native libraries for the USB & networking, & calling Java functions through the object dereferencing calls would be complex & slow.

Eclipse doesn't have any real support for C or JNI. The support it has is just syntax highlighting. Compilation has to be from the command line.

The flying segment already has assembly & C ports which must be maintained separately, because different hardware requires different languages. Realistically, Java must eventually run on iOS. Now that Steve Jobless is dead, Java will most certainly be supported on iOS.

The most complicated part is the C language waypoint interpreter. That needs to run in a subprocess. Getting a build which runs on a desktop & Android is going to be complex.

Posted by Jack Crossfire |

Oct 14, 2012 @ 07:25 PM | 7,782 Views

After a long fight, the Android finally accepted a connection to the embedded access point. The key is the access point needs to constantly send beacons or the tablet gives up & looks for another router. This scheme is how the tablet pulls off seamless roaming.

Broadcasting beacons is hard, since the embedded USB isn't full duplex. Currently, it relies on other beacons & packets from the tablet to trigger beacon sends.

The big question is now how to run the autopilot on the tablet. Should everything be rewritten in Java with just the image processing left in C? Should a simple GUI abstraction be in Java, with everything left in C?

When the autopilot began in 2007, there were no tablets & no-one knew what tablets would run. Now we have 3 incompatible frameworks. For all the pedantic correctness of Java, the horrible Android responsiveness makes it the disaster in this nest of incompatibility. Having the initialization & main loop behind the JNI presents a fiendish problem.

How will the network & USB be accessed from the native layer?

Posted by Jack Crossfire |

Oct 13, 2012 @ 10:48 PM | 7,528 Views

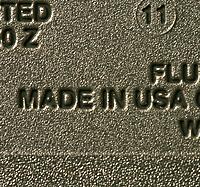

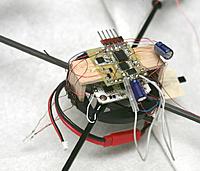

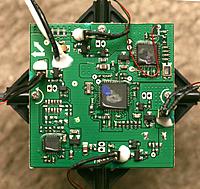

1st board to be fabricated completely submerged in flux. Sadly, it's a ground vehicle.

Posted by Jack Crossfire |

Oct 12, 2012 @ 10:13 PM | 7,608 Views

It arrived via UPS. Just need to find some room on the bench.

Posted by Jack Crossfire |

Oct 11, 2012 @ 05:00 PM | 8,018 Views

There are now 2 indoor quads in the apartment. 1 is a floor sample Syma X1, with an unknown number of crashes. The remaining Syma X1 isn't as stable as the 1st one. All the Syma X1's have a self leveling behavior, but the floor sample is less effective.

You can't have a viable UAV product without a tablet interface, so Android development begins. It's slow & clunky, but the standard if anyone is to use your software. You can't possibly consider it a competitor to iOS on usability. Its only advantage is iOS only being on 1 tablet & low price.

There are many memories of walking into a brick & mortar computer store, seeing shelf after shelf of cheap PC software, then a shelf in the back with Mac software at ridiculous prices. Android of course requires developing in Eclipse, which is also slow & loaded with bugs. Failures to reload projects abound, with reimporting usually necessary.

When it works, programming is more about knowing where to find things than solving a problem. The automatic suggestions are very useful in today's world of a new framework every 3 months, where you can't learn the nuances of every single framework.

Posted by Jack Crossfire |

Oct 09, 2012 @ 02:58 AM | 7,678 Views

So it turned out the servos interfered with wifi when the cameras pointed far left or far right, then tilted up or down. Orienting the tilt servo the right way seemed to do it. There were negligible variations in Vdd, so it must have been electromagnetic from the motor arcing.

Unless the camera was restricted to just the motions which didn't cause interference, Wifi ended up not having the bulletproof, super tight consistency needed to fly indoors. It was originally selected because vision was going to be done on the aircraft & there wasn't any knowledge of how unreliable it would be.

Pan/tilt mechanisms have always created the impression of a living being. They're usually tasked with something hopeless & covered in wires, giving the impression of something PETA would attack you for.

There are some musings about whether a traditional downward optical flow sensor with sonar would have done a better job.

Posted by Jack Crossfire |

Oct 07, 2012 @ 12:34 AM | 7,793 Views

That was hard. There is a growing library of programs which can be started in flight. Relative motion from 1 program to another still isn't possible.

The passive stability in the newly developed Syma X1 was also critical. Marcy 1 must be upgraded to fly with the new vision system. A monocopter flying itself with the lights on would be something.

Creating a marker by roughening the entire surface of an LED failed. It reduced the light output. It was a Radio Shack LED rated at 7000mcd. It was dimmer overall, so there may still be hope for roughened LED's. Roughening just the lens has already been found to be required. Not roughening anything doesn't work.

Posted by Jack Crossfire |

Oct 05, 2012 @ 11:41 PM | 8,729 Views

Test flying is still the least pleasant phase, even after 6 years of knocking out flying things. The system you put your heart & soul into building is beaten up & usually destroyed before it works.

Physics put up a huge fight. The IR sensor needed more stable power than the battery. The battery had huge troughs. It eventually ran off the Syma's 3.3V power rail with loads of capacitance while the magnetometer ran directly off the battery. The Syma's 3.3V power rail couldn't power any more than the LED.

A place for the LED which is always visible has yet to be found. The trend is to extend it more sideways. Ideally there would be springy wires which could snap it down after takeoff.

For the very slight bank angles encountered, the magnetometer alone seemed to be enough to get heading. The heading still had to be dead on to keep it inside the extremely confined room. It was finally time to write an automatic magnetometer calibrator. Though the calibration is ephemeral, the heading is now dead on when it's perfectly level.
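The post doesn't describe how the calibrator works. A minimal sketch of 1 common approach is a hard iron offset calibration: rotate the aircraft through a full turn while level, take the midpoint of the min & max on each axis as the offset, then get heading from the corrected X & Y. The function names, the axis orientation & the sign of the heading are all assumptions. Like the original, it's only valid when level, since there's no tilt compensation.

```python
import math


def hard_iron_offsets(samples):
    """Estimate the X & Y offsets from raw (x, y) readings taken over a full level turn."""
    xs = [s[0] for s in samples]
    ys = [s[1] for s in samples]
    if not xs:
        raise ValueError("need at least 1 sample")
    return ((max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0)


def heading_degrees(raw, offsets):
    """Heading in 0..360 degrees from 1 raw (x, y) reading, assuming the sensor is level."""
    x = raw[0] - offsets[0]
    y = raw[1] - offsets[1]
    return math.degrees(math.atan2(y, x)) % 360.0
```

The offsets drift with nearby metal & motor current, which fits the calibration being ephemeral. Rerunning `hard_iron_offsets` before each flight would be the cheap fix.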

The heading was the 1st of a new type of radio usage. The mag output is sent out the UART, which directly modulates the FSK pin instead of using the radio's FIFO. It would have saved a lot of pain to know, back in 2009, that the MRF49XA's FSK pin could be directly connected to the UART instead of banging on the FIFO registers.

It's still very temperamental. The vision system needs to be right at the limit of its latency. Surprising that altitude is the least demanding direction to stabilize.

Posted by Jack Crossfire |

Oct 05, 2012 @ 05:00 AM | 8,043 Views

So after some more banging, the Syma finally took off, hovered, & landed all on autopilot. It was extremely stable, but needed nearly 100% pitch trim. The shadow of the 1st personal drone which could follow you around a building started to appear.

It finally proved the stereo vision concept. Haven't seen any other case of a stereo camera flying something, anywhere. Previously, they all used multiple cameras throughout the room or a single camera & multiple markers. The stereo camera is how a human flies, biologically inspired.

Vision needed a very precise servo PWM conversion to degrees. The ultimate vision system would give the exact camera direction from an IMU, for each frame. The PID limits had to be reduced to get horizontal position to stabilize.
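The post doesn't say which PID limits were reduced. A sketch of a PID loop with the 2 usual limits, 1 on the integral term & 1 on the total output, might look like this. The class name, the gains & the limit values are all hypothetical.

```python
def clamp(value, limit):
    """Restrict value to the range -limit..+limit."""
    return max(-limit, min(limit, value))


class ClampedPID:
    """PID controller with separate limits on the integral term & the output."""

    def __init__(self, kp, ki, kd, out_limit, i_limit):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.out_limit = out_limit
        self.i_limit = i_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        """Advance 1 step. error is in position units, dt is in seconds."""
        # Limiting the integral keeps it from winding up during long position errors.
        self.integral = clamp(self.integral + self.ki * error * dt, self.i_limit)
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.integral + self.kd * derivative
        # Limiting the output caps how hard the stick can be pushed.
        return clamp(output, self.out_limit)
```

Lowering `out_limit` caps the bank angle the autopilot can command, which is 1 plausible reason smaller limits would help in a confined room with a laggy vision system.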

So far, have learned the Syma has a calibration stage in which it needs to be very still to calibrate the gyros. This is the #1 reason it's sometimes unstable & probably why it needed so much pitch trim.

The agenda has a few more steps before any time can go into photographing it. The motors also have a very finite life.

It turned out the Syma has no accelerometer. There's absolutely no feedback if it's tilted in your hand.

It's actually mechanically stabilized, which is probably more effective than an accelerometer. An accelerometer searching for its perceived level point would never hit the exact equilibrium attitude the way the Syma does.

The mechanical stabilization also eliminates drift

Posted by Jack Crossfire |

Oct 04, 2012 @ 02:32 AM | 7,722 Views

Today's discovery was that wifi cards don't successfully arbitrate when 2 packets are sent simultaneously. There are resends, but the process is much slower than believed, causing packets to get delayed for many seconds.

The delusion about fast wifi arbitration led to the camera & aircraft sending packets simultaneously, which was causing massive network collisions. The delusion persisted, even after discovering wifi had to run in 54g mode to work at all.

After reordering the packets so the camera doesn't get triggered to send until the copter is done sending everything, the network recovered, but not completely.

It still fell over after 15 minutes, but since the downgrade was constant, it was probably a firmware bug or overheating more than network collisions. This was still using 54g mode, which has serious range degradation.

By this time, the process had already begun to shift from wifi to USB for the camera. That would be a very painful process, just like debugging the USB host was.

Vicon uses hard wired gigabit ethernet for its cameras. They probably get a huge reduction in latency, compared to wifi. They probably also do all the keying & a lot of object detection in the camera. Marcy 2 just does the keying in the camera.

Wifi doesn't have a noticeable effect on latency. In a subjective test, the left eye lagged slightly more than the right eye, but both had hardly any lag. Things would still be better if the next frame didn't have to arrive in order to discover the size of the previous frame.

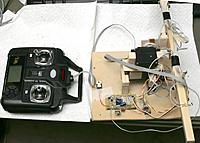

Finally, efforts to control the Syma by computer worked. It merely took a simple RC filter to convert PWM to constant voltage to emulate the sticks. A 10k & 1nF were good enough. The Syma remote control ended up taking linear voltage. The Syma also bases stick center position on the voltage when it powers up.
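For reference, the numbers behind a 10k & 1nF filter can be worked out with the standard first order RC formulas. The sketch below computes the cutoff frequency, the mean output voltage for a given duty cycle & the steady state ripple. The PWM frequency & supply voltage aren't given in the post, so they're left as parameters, & the function names are hypothetical.

```python
import math


def rc_cutoff_hz(r_ohms, c_farads):
    """-3dB cutoff frequency of a first order RC low pass filter."""
    return 1.0 / (2.0 * math.pi * r_ohms * c_farads)


def pwm_mean_voltage(duty, vcc):
    """Mean filter output for an ideal PWM signal swinging between 0 & vcc."""
    return duty * vcc


def pwm_ripple_volts(duty, vcc, pwm_hz, r_ohms, c_farads):
    """Peak to peak steady state ripple of an RC filter driven by ideal PWM."""
    tau = r_ohms * c_farads
    period = 1.0 / pwm_hz
    a = math.exp(-duty * period / tau)          # decay factor over the on time
    b = math.exp(-(1.0 - duty) * period / tau)  # decay factor over the off time
    v_max = vcc * (1.0 - a) / (1.0 - a * b)
    v_min = v_max * b
    return v_max - v_min
```

`rc_cutoff_hz(10e3, 1e-9)` comes out near 15.9kHz, so the PWM frequency has to be well above that for the output to look like a constant voltage. `pwm_ripple_volts` gives an estimate of what's left over at a given PWM frequency.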

Adding the tracking LED either made it nose heavy or the stick input is now off balance.

Posted by Jack Crossfire |

Oct 03, 2012 @ 02:46 AM | 11,659 Views

After the disastrous Blade MQX, the Syma X1 was incredible. It has an accelerometer, giving it rock solid, level flight. It's the only thing to be reasonably flyable in the apartment, by a human. The transmitter is still a steaming pile of dog turd, but it's much more functional than the Blade. It has a convenient LCD, showing the control status.

It's hard to believe Horizon put out something as non functional as the Blade MQX unless they planned on releasing a later version with accelerometer to milk every last drop out of the customers. It's like they didn't really want to produce a quad, but put out a teaser to test the market.

The Syma was so stable, it was an immediate contender for the indoor drone prize. An initial test would ignore yaw & just see if machine vision could hover it. The weight limit would require it to be flown with the existing board & the transmitter hacked into.

After some heroic soldering, the stick voltages were once again logarithmic, like every other el cheapo remote control. Also, using PWM to synthesize the voltages was too noisy. The digital protocol was a well obfuscated SPI protocol. These issues would take some doing to resolve & a 2nd IMU would still be required to determine heading, making a custom flight computer more practical than hacking the transmitter.

Posted by Jack Crossfire |

Oct 02, 2012 @ 06:03 PM | 9,582 Views

After the Blade CX2, which had fairly capable electronics, the Blade MQX was a total steaming pile of dog turd. The main problem is it has no accelerometer. It only has an ITG-3205, 3 axis gyro. It can't level itself like any other quad copter & is even more unstable than the Blade CX2.

The CX2 was eventually reasonably stable, once it was perfectly balanced & the servo pushrods were adjusted. It would stay level, while having a dynamic instability which a human could control.

The MQX is like a traditional copter, with no force leveling it. This explains why Horizon still sells the CX2. Making an autopilot would take a significant investment in a board which ran on 3.3 - 4.2V & fit under the weight limit.

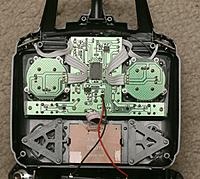

Finally, the MQX remote control is pure trash compared to the CX2 remote control. The CX2 was a stock transmitter for any other airplane while the MQX is cost minimized as much as possible for just the MQX. No tactile trim pots. No range of stick motion, despite requiring more stick motion than the CX2. Inside, the radio board is hard soldered to the main board at an angle, to jam it in the remaining space.

The CX2 had discrete radio modules in the transmitter & receiver which could be reused somewhere else. The MQX has the radio chip soldered on the main board.

Further testing after more tightly balancing the CX2 wasn't very different. Instead of changing attitude based on position, it now got direct cyclic input based on position & relied on balance to stay level, which seemed to give faster response. The coax should theoretically automatically level itself.

Response to movement was still too slow to keep it in the room. There's just too much latency in the vision system. In addition to the blades hitting each other, also found the swashplate on the CX2 tended to come apart in every crash. It snapped back together, but was a bit too loose.
